Deploy and Scale

In the previous steps, we started a developer loop, added two interfaces (wasi:logging and wasi:keyvalue), and extended our application with pluggable capabilities.

Now we'll learn how to:

- Declaratively deploy an application in a local wasmCloud environment

- Scale an application to handle multiple parallel requests

- Distribute an application globally

- Use the wasmCloud dashboard

This tutorial assumes you're following directly from the previous steps. Make sure to complete Quickstart, Add Features, and Customize and Extend first.

Declaratively deploying the application

We will continue to use the Redis key-value capability provider as in the Customize and Extend step, but this time we will specify the provider using a declarative application manifest written in YAML, much like a Kubernetes manifest.

This .yaml file defines the relationships between the components and providers that make up our application, along with other important configuration details. It is conventionally named wadm.yaml after the wasmCloud Application Deployment Manager that handles scheduling.

We can modify our wadm.yaml to include the Redis provider.

Open wadm.yaml in your code editor and make the changes below.

apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: hello-world
  annotations:
    description: 'HTTP hello world demo'
spec:
  components:
    - name: http-component
      type: component
      properties:
        # Your manifest will point to the path of the built component; you can also
        # start published components from OCI registries
        image: file://./build/http_hello_world_s.wasm
      traits:
        - type: spreadscaler
          properties:
            instances: 1
        # The new key-value link configuration #
        - type: link
          properties:
            target: kvredis
            namespace: wasi
            package: keyvalue
            interfaces: [atomics, store]
            target_config:
              - name: redis-url
                properties:
                  url: redis://127.0.0.1:6379
    # The new capability provider #
    - name: kvredis
      type: capability
      properties:
        image: ghcr.io/wasmcloud/keyvalue-redis:0.28.2
    - name: httpserver
      type: capability
      properties:
        image: ghcr.io/wasmcloud/http-server:0.26.0
      traits:
        - type: link
          properties:
            target: http-component
            namespace: wasi
            package: http
            interfaces: [incoming-handler]
            source_config:
              - name: default-http
                properties:
                  address: 0.0.0.0:8000

- kvredis is defined as a capability provider and fetched from an OCI image.

- http-component connects to kvredis via a runtime link.

- The link definition specifies the interface and configuration through which the component and provider will communicate.

Save your changes to wadm.yaml.

Scaling up 📈

So far, our application can only handle a single request at a time. This is because only a single instance is defined for it in the application manifest as a property of the spreadscaler:

apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: hello-world
  annotations:
    description: 'HTTP hello world demo'
spec:
  components:
    - name: http-component
      type: component
      properties:
        image: file://./build/http_hello_world_s.wasm
      traits:
        - type: spreadscaler
          properties:
            instances: 1
...

Following the specification in the manifest, wasmCloud instructs the host's WebAssembly runtime (wasmtime) to instantiate only a single instance of our component at any time to process incoming requests. As a result, requests received at the same time are processed sequentially, one after the other.

Let's try adding a simple sleep to the handler to simulate a longer processing time:

Go:

//go:generate go run go.bytecodealliance.org/cmd/wit-bindgen-go generate --world hello --out gen ./wit
package main

import (
	"fmt"
	"net/http"
	"time"

	atomics "github.com/wasmcloud/wasmcloud/examples/golang/components/http-hello-world/gen/wasi/keyvalue/atomics"
	store "github.com/wasmcloud/wasmcloud/examples/golang/components/http-hello-world/gen/wasi/keyvalue/store"
	"go.wasmcloud.dev/component/log/wasilog"
	"go.wasmcloud.dev/component/net/wasihttp"
)

func init() {
	// Register the handleRequest function as the handler for all incoming requests.
	wasihttp.HandleFunc(handleRequest)
}

func handleRequest(w http.ResponseWriter, r *http.Request) {
	logger := wasilog.ContextLogger("handleRequest")

	name := "World"
	if len(r.FormValue("name")) > 0 {
		name = r.FormValue("name")
	}
	logger.Info("Greeting", "name", name)

	sleep := 2 * time.Second
	logger.Info(fmt.Sprintf("Sleep for %v to simulate longer processing time", sleep))
	time.Sleep(sleep)

	kvStore := store.Open("default")
	if err := kvStore.Err(); err != nil {
		w.Write([]byte("Error: " + err.String()))
		return
	}

	value := atomics.Increment(*kvStore.OK(), name, 1)
	if err := value.Err(); err != nil {
		w.Write([]byte("Error: " + err.String()))
		return
	}

	logger.Info(fmt.Sprintf("Replying greeting 'Hello x%d, %s!'", *value.OK(), name))
	fmt.Fprintf(w, "Hello x%d, %s!\n", *value.OK(), name)
}

// Since we don't run this program like a CLI, the `main` function is empty. Instead,
// we call the `handleRequest` function when an HTTP request is received.
func main() {}

Rust:

use wasmcloud_component::http::ErrorCode;
use wasmcloud_component::wasi::keyvalue::*;
use wasmcloud_component::{http, info};
use std::{thread, time};

struct Component;

http::export!(Component);

impl http::Server for Component {
    fn handle(
        request: http::IncomingRequest,
    ) -> http::Result<http::Response<impl http::OutgoingBody>> {
        let (parts, _body) = request.into_parts();
        let query = parts
            .uri
            .query()
            .map(ToString::to_string)
            .unwrap_or_default();
        let name = match query.split("=").collect::<Vec<&str>>()[..] {
            ["name", name] => name,
            _ => "World",
        };
        info!("Greeting {name}");

        let sleep = time::Duration::from_secs(2);
        info!("Sleep for {} to simulate longer processing time", sleep.as_secs());
        thread::sleep(sleep);

        let bucket = store::open("default").map_err(|e| {
            ErrorCode::InternalError(Some(format!("failed to open KV bucket: {e:?}")))
        })?;
        let count = atomics::increment(&bucket, &name, 1).map_err(|e| {
            ErrorCode::InternalError(Some(format!("failed to increment counter: {e:?}")))
        })?;

        info!("Replying greeting 'Hello x{count}, {name}!'");
        Ok(http::Response::new(format!("Hello x{count}, {name}!\n")))
    }
}

TypeScript:

async function handle(req: IncomingRequest, resp: ResponseOutparam) {
  // Start building an outgoing response
  const outgoingResponse = new OutgoingResponse(new Fields());

  // Access the outgoing response body
  let outgoingBody = outgoingResponse.body();

  const name = getNameFromPath(req.pathWithQuery() || '');
  log('info', '', `Greeting ${name}`);

  const sleep = 2000;
  log('info', '', `Sleep for ${sleep} to simulate longer processing time`);
  await new Promise(resolve => setTimeout(resolve, sleep));

  // Increment the bucket's count
  const bucket = open('default');
  const count = increment(bucket, name, 1);
  log('info', '', `Replying greeting - Hello x${count}, ${name}!`);

  {
    // Create a stream for the response body
    let outputStream = outgoingBody.write();
    // Write hello world to the response stream
    outputStream.blockingWriteAndFlush(
      new Uint8Array(new TextEncoder().encode(`Hello x${count}, ${name}!\n`)),
    );
    // @ts-ignore: This is required in order to dispose the stream before we return
    outputStream[Symbol.dispose]();
  }
  ...

The response time of our hello handler is very low. To show that requests are not processed in parallel, we need to ensure a longer response time, which we can exploit in our tests.

Because we've made changes, run wash build to compile the updated Wasm component.

wash build

Start a local wasmCloud environment (using the -d/--detached flag to run in the background):

wash up -d

If you stopped the Redis server in the previous tutorial, start it up again:

Local Redis Server:

redis-server &

Docker:

docker run -d --name redis -p 6379:6379 redis

Manually deploy the application:

wash app deploy wadm.yaml

Now we'll try to send multiple requests in parallel.

Linux/macOS:

seq 1 10 | xargs -P0 -I {} curl --max-time 3 "localhost:8000?name=Alice"

Hello x1, Alice!
curl: (28) Operation timed out after 3002 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3006 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3006 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3005 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3005 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3003 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3005 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3001 milliseconds with 0 bytes received

Windows:

1..10 | ForEach-Object -Parallel { curl --max-time 3 'localhost:8000?name=Alice' }

Hello x1, Alice!
curl: (28) Operation timed out after 3002 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3006 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3005 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3005 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3003 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3005 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3001 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3005 milliseconds with 0 bytes received
curl: (28) Operation timed out after 3001 milliseconds with 0 bytes received

As you can see, only the first curl command receives the expected response in time, while all the others time out. However, if you check the logs of the wasmCloud host, you will see that multiple requests have been received and forwarded to our component one after the other.

2024-10-20T19:29:30.897232Z INFO log: wasmcloud_host::wasmbus::handler: Greeting Alice component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:30.897253Z INFO log: wasmcloud_host::wasmbus::handler: Sleep for 2 to simulate longer processing time component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:32.905355Z INFO log: wasmcloud_host::wasmbus::handler: Replying greeting 'Hello x1, Alice!' component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:32.906138Z INFO log: wasmcloud_host::wasmbus::handler: Greeting Alice component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:32.906258Z INFO log: wasmcloud_host::wasmbus::handler: Sleep for 2 to simulate longer processing time component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:34.914152Z INFO log: wasmcloud_host::wasmbus::handler: Replying greeting 'Hello x2, Alice!' component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:34.914992Z INFO log: wasmcloud_host::wasmbus::handler: Greeting Alice component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:34.915023Z INFO log: wasmcloud_host::wasmbus::handler: Sleep for 2 to simulate longer processing time component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:36.923568Z INFO log: wasmcloud_host::wasmbus::handler: Replying greeting 'Hello x3, Alice!' component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:36.924326Z INFO log: wasmcloud_host::wasmbus::handler: Greeting Alice component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:36.924351Z INFO log: wasmcloud_host::wasmbus::handler: Sleep for 2 to simulate longer processing time component_id="rust_hello_world-http_component" level=Level::Info context=""

2024-10-20T19:29:38.933227Z INFO log: wasmcloud_host::wasmbus::handler: Replying greeting 'Hello x4, Alice!' component_id="rust_hello_world-http_component" level=Level::Info context=""

DEBUG or TRACE logs of the wasmCloud host

You can also check the wasmCloud host's DEBUG or TRACE logs for more detailed information (e.g. using wash up --log-level=debug). In these logs, you can clearly see that for received requests, our hello component is instantiated sequentially.

If you wish, you can also use wash spy to check which messages the capability providers and the hello component have received and sent.

wash spy --experimental hello_world-http_component

After multiple requests have been received but timed out, it is no longer possible to send further requests to our hello application. The reason is that the httpserver capability provider is no longer able to invoke the hello component via NATS. To continue, we must first delete and redeploy our application.

To receive multiple requests in parallel, we need to instruct wasmCloud to scale our component according to the incoming load. WebAssembly components can be easily scaled due to the small size and portability of the binaries as well as wasmtime's ability to efficiently instantiate multiple instances of a given component.

We leverage these aspects to make it simple to scale your applications with wasmCloud. Components only use resources when they're actively processing requests, so you can specify the number of instances you want to run and wasmCloud will automatically scale up and down to meet demand.

Let's allow our application to scale up to 100 instances simultaneously by editing the application manifest in wadm.yaml:

apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: hello-world
  annotations:
    description: 'HTTP hello world demo'
spec:
  components:
    - name: http-component
      type: component
      properties:
        image: file://./build/http_hello_world_s.wasm
      traits:
        - type: spreadscaler
          properties:
            # Update the scale to 100 (previously instances: 1)
            instances: 100
...

Our application is ready to be deployed again with up to 100 instances, meaning it can handle up to 100 simultaneous incoming HTTP requests.

Deploy the application again:

wash app deploy wadm.yaml

Now wasmCloud will be able to automatically scale the component according to the incoming load. Let's try sending multiple requests in parallel again.

Linux/macOS:

seq 1 10 | xargs -P0 -I {} curl --max-time 3 "localhost:8000?name=Bob"

Hello x1, Bob!
Hello x2, Bob!
Hello x3, Bob!
Hello x5, Bob!
Hello x4, Bob!
Hello x6, Bob!
Hello x8, Bob!
Hello x7, Bob!
Hello x9, Bob!
Hello x10, Bob!

Windows:

1..10 | ForEach-Object -Parallel { curl --max-time 3 'localhost:8000?name=Bob' }

Hello x1, Bob!
Hello x2, Bob!
Hello x3, Bob!
Hello x5, Bob!
Hello x4, Bob!
Hello x6, Bob!
Hello x8, Bob!
Hello x7, Bob!
Hello x9, Bob!
Hello x10, Bob!

As we've seen, if a component receives too many requests in parallel, it may break down and wasmCloud will not be able to forward further requests. Therefore, it is important to plan and manage the maximum number of concurrent instances configured in the spreadscaler according to the expected load.

When you're done with the application, delete it from the wasmCloud environment:

wash app delete hello-world

Shut down the environment:

wash down

Stop your Redis server:

Local Redis Server:

Enter CTRL+C in the terminal running Redis, or shut it down with:

redis-cli shutdown

Docker:

docker stop redis

Distribute Globally 🌍

No matter where your components and capability providers run, they can seamlessly communicate on the lattice. This means you can deploy your components to any cloud provider, edge location, or even on-premises and they will be able to communicate with each other. This is possible because wasmCloud uses NATS as its messaging layer, which is a lightweight, high-performance, and secure messaging system that can be deployed anywhere.

Distributing your application just requires updating your manifest to include spread information and making sure there are available wasmCloud hosts in the desired locations. We can update our hello application to run in multiple locations by editing wadm.yaml:

apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: hello-world
  annotations:
    description: 'HTTP hello world demo'
spec:
  components:
    - name: http-component
      type: component
      properties:
        image: file://./build/http_hello_world_s.wasm
      traits:
        - type: spreadscaler
          properties:
            instances: 100
            # Ensure the component is spread across multiple zones
            # Of the 100 replicas, place 80% in us-east-1 and 20% in us-west-1
            spread:
              - name: eastcoast
                weight: 80
                requirements:
                  zone: us-east-1
              - name: westcoast
                weight: 20
                requirements:
                  zone: us-west-1

These requirements will ensure that 80% of the replicas are placed in us-east-1 and 20% in us-west-1. You can specify any number of zones and weights to distribute your components across multiple locations. Each requirement matches directly with a label that must be present on a host, and your currently launched host doesn't have any specified labels. Let's launch two more hosts with the required labels, and then deploy our new application.

If you already deployed your application, it will be placed in the Failed state because the requirements are not met. As soon as we launch the hosts with the required labels, the application will be automatically rescheduled.

wash up --multi-local --label zone=us-east-1 -d
wash up --multi-local --label zone=us-west-1 -d

Run wash app deploy wadm.yaml again, and wasmCloud will automatically distribute your component across multiple locations based on the spread requirements. You can see all of your hosts and the components running on them by running wash get inventory.

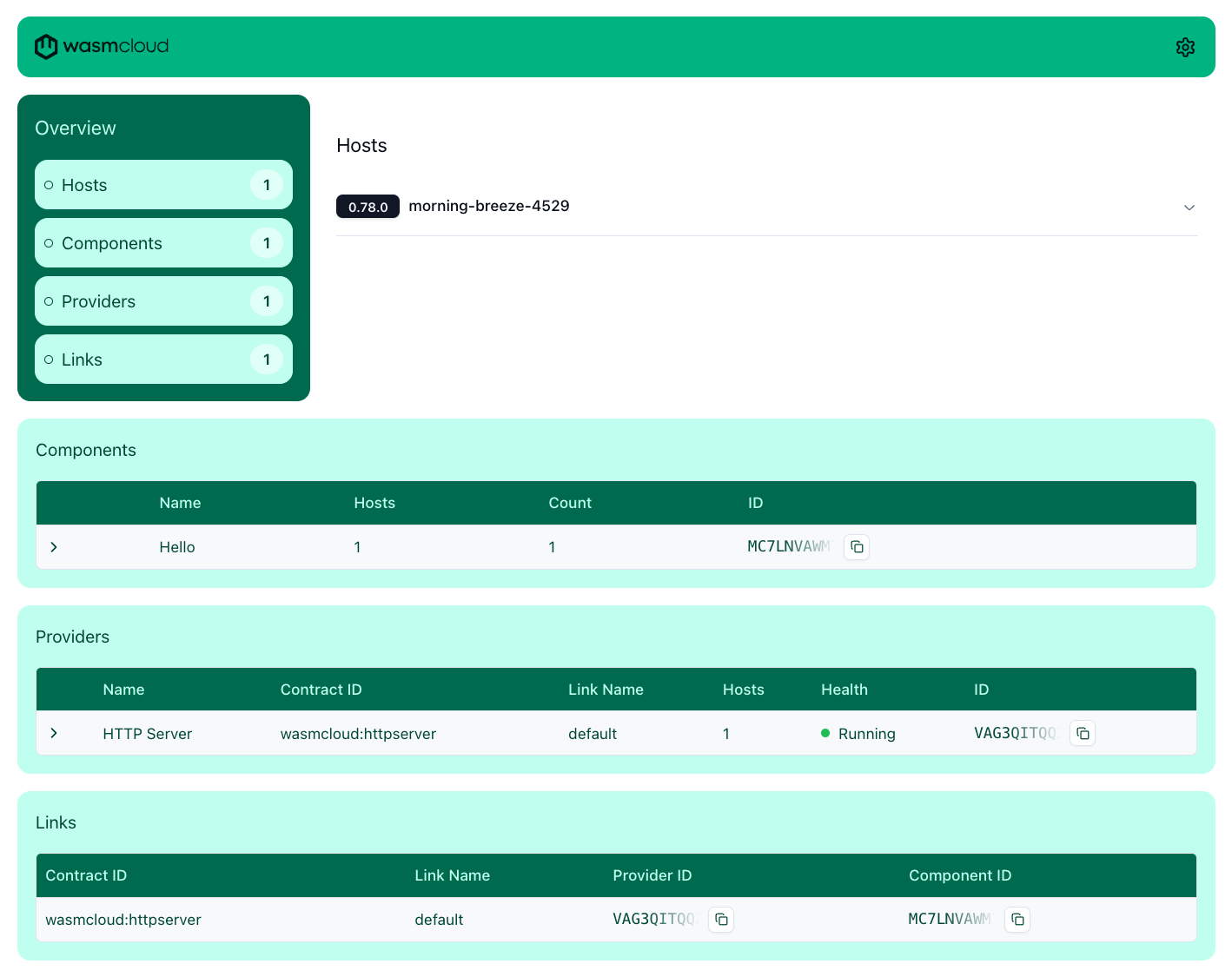

View the wasmCloud dashboard

You can start the wasmCloud dashboard using wash.

wash ui

If you would like to change the port that the websocket is enabled on, you can pass the --nats-websocket-port option to wash up.

Note that you will need to stop NATS if it is already running or the new config will not apply. You can do this with wash down --all to stop everything.

wash up --nats-websocket-port 4444 # defaults to 4223

Treat wasmCloud hosts as a commodity. Stopping your host will stop all of the components running on it, and wadm will look for other available hosts to reschedule your application on. As soon as you launch your new host with the --nats-websocket-port option, your application will be rescheduled on it and work just as it did before.

Then, you can launch the wasmCloud dashboard using wash ui, which will launch the dashboard on http://localhost:3030.

This is a great way to visualize what is running on your host, even multiple hosts that are connected to the same NATS server.

Summary

Now you've learned how to scale your applications with wasmCloud and distribute them across multiple locations. You can use this same approach to scale and distribute any wasmCloud application based on load and location requirements. Read on to the next page to learn more about the benefits of wasmCloud and how to continue to develop after this quickstart.